Nvidia has announced next-generation AI supercomputer chips that are expected to play a major role in future breakthroughs in deep learning and large language models (LLMs) such as OpenAI’s GPT-4. The company recently introduced the HGX H200, a new high-end platform for AI work. The new GPU succeeds the H100, which has been a major success for Nvidia.

Nvidia HGX H200, the new peak in performance

Nvidia has announced its brand new H200 Hopper GPU, equipped with Micron’s HBM3e memory, which is billed as the world’s fastest. In addition to new AI platforms, Nvidia also announced a major supercomputer win for its Grace Hopper Superchips, which will power the exaflop-class supercomputer called JUPITER.

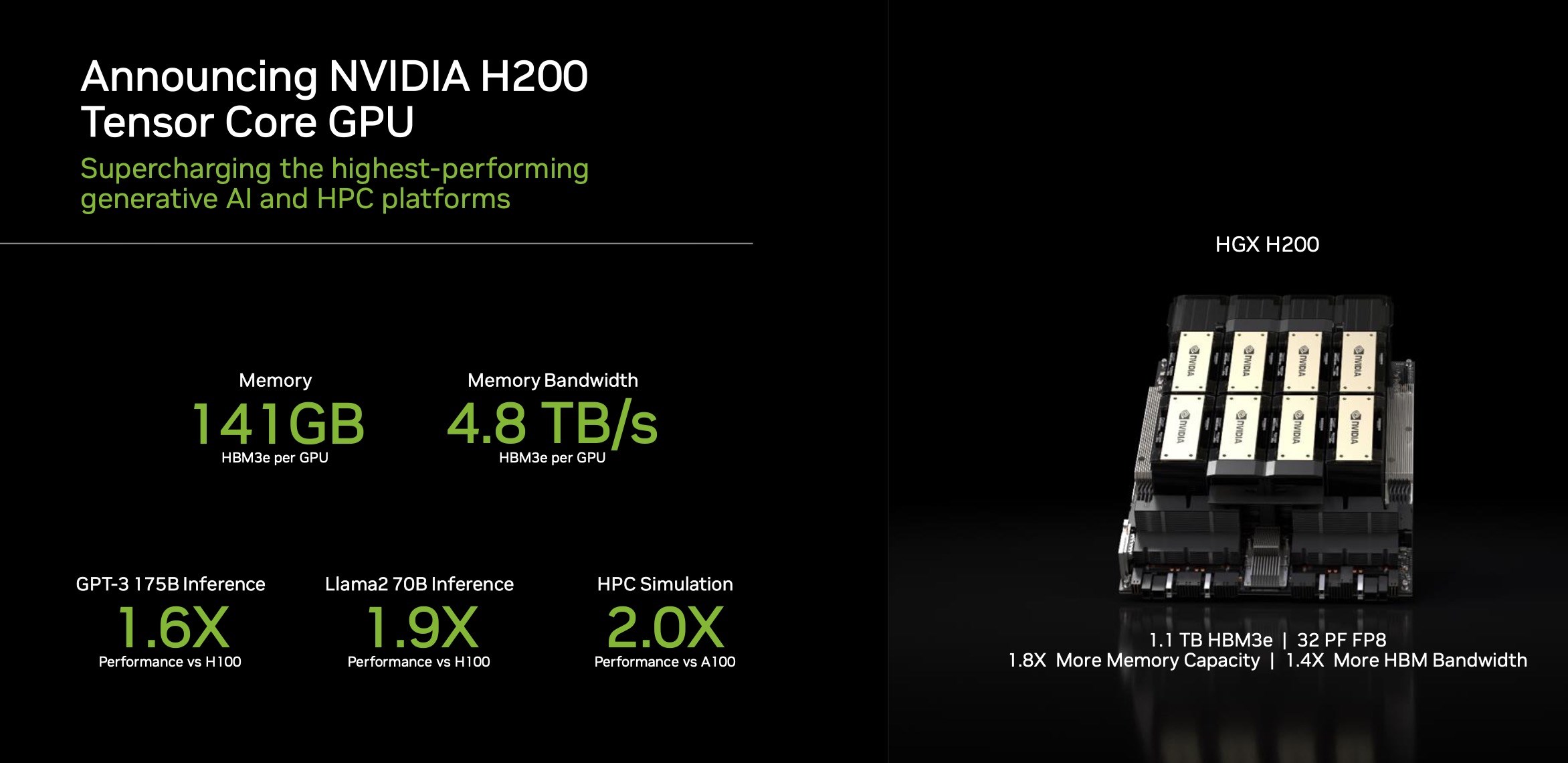

Nvidia’s H100 GPUs have been the most in-demand AI chips in the industry so far, but the green team wants to offer even more performance to its customers. The HGX H200, the company's latest HPC and AI compute platform built on H200 Tensor Core GPUs, comes with the latest Hopper optimizations in both hardware and software and the world’s fastest memory solution.

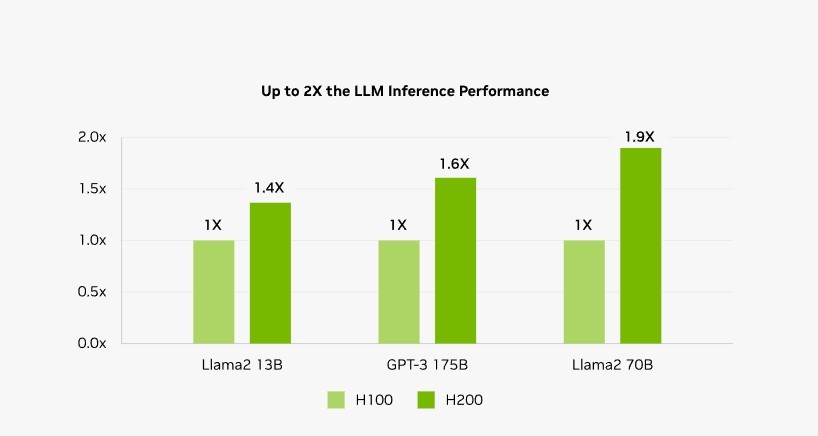

Nvidia H200 GPUs are equipped with Micron’s HBM3e solution, offering up to 141 GB of memory capacity and up to 4.8 TB/s of bandwidth. That is 2.4 times the bandwidth and nearly double the capacity of the A100. The new memory solution allows Nvidia to nearly double AI inference performance compared to H100 GPUs in applications like Llama 2 (a 70-billion-parameter LLM). Recent advances in the TensorRT-LLM suite also provide major performance increases in many AI applications.

Nvidia H200 GPUs will be available in a wide range of HGX H200 servers with 4- and 8-way GPU configurations. The 8-way H200 configuration in a single HGX system will deliver up to 32 petaFLOPS of FP8 compute performance and 1.1 TB of memory capacity.
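The headline figures can be cross-checked with some simple arithmetic. The sketch below is illustrative only: the H200 numbers are the ones quoted in this article, while the A100 baseline (80 GB, roughly 2 TB/s) is an assumption not stated here, and the function names are hypothetical.

```python
# Figures quoted in the article for a single H200 GPU.
H200_MEMORY_GB = 141
H200_BANDWIDTH_TBPS = 4.8

# Assumed A100 80 GB baseline (NOT stated in the article; approximate).
A100_MEMORY_GB = 80
A100_BANDWIDTH_TBPS = 2.0


def system_memory_tb(gpu_count: int, memory_gb: float = H200_MEMORY_GB) -> float:
    """Aggregate GPU memory of one HGX system, in decimal terabytes."""
    return gpu_count * memory_gb / 1000


def per_gpu_fp8_pflops(system_pflops: float, gpu_count: int) -> float:
    """Per-GPU share of the quoted system-level FP8 figure."""
    return system_pflops / gpu_count


def ratio(new: float, old: float) -> float:
    """How many times larger `new` is than `old`."""
    return new / old


if __name__ == "__main__":
    print(f"8-way memory: {system_memory_tb(8):.3f} TB")
    print(f"4-way memory: {system_memory_tb(4):.3f} TB")
    print(f"FP8 per GPU:  {per_gpu_fp8_pflops(32, 8):.1f} PFLOPS")
    print(f"Bandwidth vs assumed A100: {ratio(H200_BANDWIDTH_TBPS, A100_BANDWIDTH_TBPS):.2f}x")
    print(f"Capacity vs assumed A100:  {ratio(H200_MEMORY_GB, A100_MEMORY_GB):.2f}x")
```

Under these assumptions, eight GPUs at 141 GB each come to about 1.13 TB, which matches the quoted 1.1 TB, and the capacity gain over the assumed A100 baseline works out to somewhat less than a full doubling.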

Compatible with existing servers

The new GPUs will also be compatible with existing HGX H100 systems, so customers already running H100-based systems will be able to upgrade quickly by simply purchasing the new GPUs. Nvidia partners including Asus, ASRock Rack, Dell, Eviden, Gigabyte, Hewlett Packard Enterprise, Ingrasys, Lenovo, QCT, Wiwynn, Supermicro, and Wistron will begin delivering updated solutions when H200 GPUs become available in Q2 2024.

Nvidia did not provide any information about the pricing of the new chips, but they are expected to be expensive at launch. Current H100s are estimated to sell for between $25,000 and $40,000 each. Although the H200's main change is on the memory side, which accounts for most of the performance difference, prices are expected to be higher. Meanwhile, Nvidia will not pull the plug on the H100, which remains in great demand. The company aims to produce at least 2 million H100s next year.