Google has announced the most powerful, scalable and flexible AI accelerator it has ever developed, along with a new AI hypercomputer.

With the artificial intelligence market growing rapidly, companies continue to produce ever more powerful accelerators. Following products such as Microsoft’s Maia 100 and Amazon’s Trainium2, Google now joins the list.

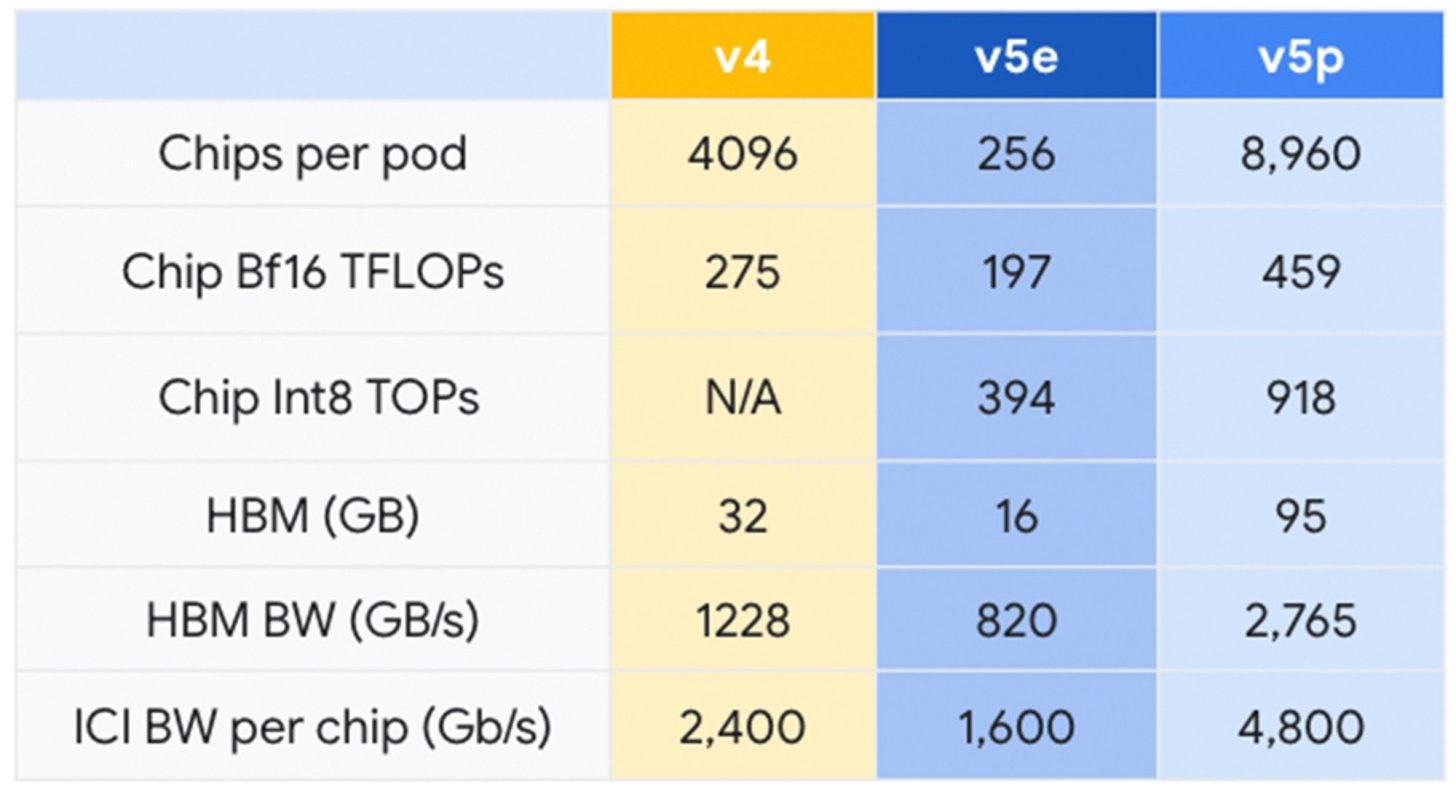

Alongside its new AI model Gemini, the company introduced the Cloud TPU v5p AI accelerator. The new Cloud TPU (Tensor Processing Unit) is Google’s most capable accelerator to date. Each TPU v5p pod contains 8,960 chips, and the chips are linked to one another at 4,800 Gbps per chip.

2.8 times faster in training large language models

Compared to the TPU v4, the new v5p offers twice the FLOPS (floating point operations per second) and three times the high-bandwidth memory (HBM) capacity. It also trains large language models (LLMs) 2.8 times faster.

Here is how the Google Cloud TPU v5p compares with the previous TPU v4:

- 2x the FLOPS (459 TFLOPS BF16 / 918 TOPS INT8)

- 3x the memory capacity (95 GB HBM)

- 2.8x faster large language model training

- 1.9x faster training of embedding-dense models

- 2.25x the memory bandwidth (2,765 GB/s vs 1,228 GB/s)

- 2x the inter-chip link speed (4,800 Gbps vs 2,400 Gbps)
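
The multipliers in the list above can be checked with simple arithmetic. The short Python sketch below recomputes the two ratios for which both raw figures are quoted, and backs out the TPU v4 values implied by the "2x" and "3x" claims. The variable and function names are illustrative only, and the implied v4 numbers are derived from the quoted multipliers, not taken from an official specification.

```python
# Figures quoted in the comparison list (TPU v5p vs. TPU v4).
MEM_BW_GB_PER_S = {"v5p": 2765, "v4": 1228}   # bandwidth, GB/s
LINK_GBPS = {"v5p": 4800, "v4": 2400}         # inter-chip link, Gbps per chip


def ratio(new: float, old: float) -> float:
    """Return how many times larger `new` is than `old`."""
    return new / old


mem_bw_ratio = ratio(MEM_BW_GB_PER_S["v5p"], MEM_BW_GB_PER_S["v4"])
link_ratio = ratio(LINK_GBPS["v5p"], LINK_GBPS["v4"])

# Assumption: derive the v4 values implied by the "2x FLOPS" and
# "3x memory capacity" claims; these are not official v4 specs.
implied_v4_bf16_tflops = 459 / 2
implied_v4_hbm_gb = 95 / 3

print(f"bandwidth:        {mem_bw_ratio:.2f}x")
print(f"inter-chip link:  {link_ratio:.2f}x")
print(f"implied v4 BF16:  {implied_v4_bf16_tflops:.1f} TFLOPS")
print(f"implied v4 HBM:   {implied_v4_hbm_gb:.1f} GB")
```

The quoted bandwidth and link figures do reproduce the 2.25x and 2x multipliers, so those two rows of the list are internally consistent.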

Recognizing that strong AI performance depends on hardware and software working together, Google also developed an AI hypercomputer designed to run modern AI workloads. According to the company, the hypercomputer can tune its performance to the workload it is processing.

With major announcements in both software and hardware, Google has shown that it does not intend to be left behind in the artificial intelligence race.