Today, phones and computers have become easy to use for everyone from 7 to 70, and technology giants are competing hard to add accessibility features for people with various disabilities. Apple, one of the most successful brands in this area, announced new accessibility features ahead of Global Accessibility Awareness Day on May 18.

One of the new features, Personal Voice, offers users at risk of losing their ability to speak an easy and safe way to create a voice similar to their own. The Live Speech feature is designed to support people who cannot speak or have lost the ability to speak.

New accessibility features make life easier

Assistive Access

- The Assistive Access feature offers a customized experience for Phone and FaceTime, which are combined into a single Calls app, as well as for the Messages, Camera, Photos, and Music apps.

- Assistive Access provides a distinct interface with high-contrast buttons and large text labels, along with tools that help trusted supporters tailor the experience for the person they support.

- To lighten cognitive load, Assistive Access uses new design ideas that distill apps and experiences down to their essential features.

- Developed with feedback from people with cognitive disabilities and their trusted supporters, the feature focuses on the activities these users enjoy and that are central to iPhone and iPad, such as connecting with loved ones, taking and viewing photos, and listening to music.

Live Speech

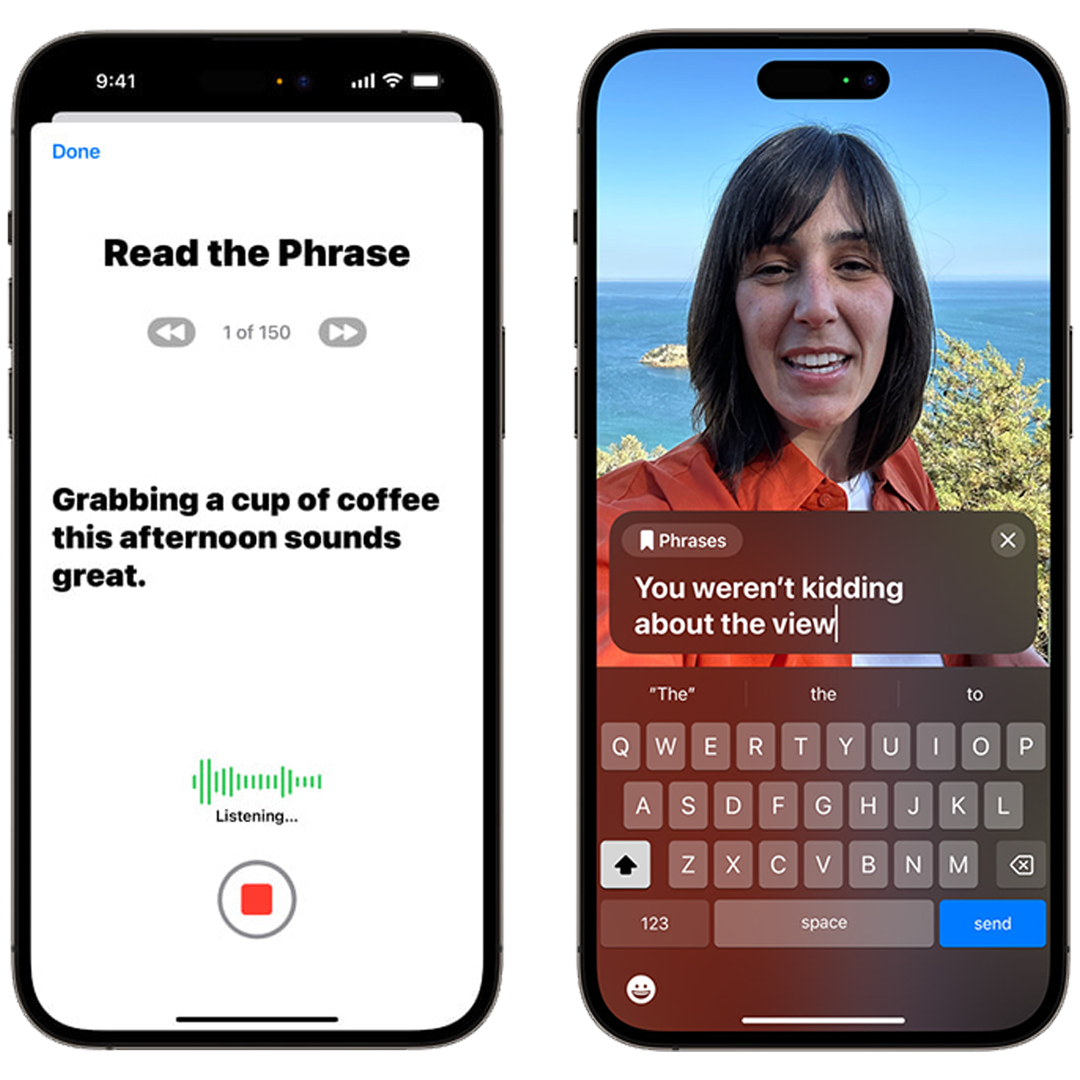

- With the Live Speech feature on iPhone, iPad, and Mac, users can type what they want to say and have it read aloud during phone and FaceTime calls as well as in in-person conversations.

- Users can also save frequently used phrases so they can respond quickly when chatting with friends, family, and coworkers.

- The Live Speech feature is designed to support millions of people around the world who are unable to speak or who have lost their ability to speak over time.

Personal Voice

- The Personal Voice feature offers users at risk of losing their ability to speak an easy and secure way to create a voice that sounds like their own.

- The feature is designed for users who have recently been diagnosed with ALS (amyotrophic lateral sclerosis) or another condition that can progressively affect their ability to speak.

- Users can create a Personal Voice on iPhone or iPad by reading a randomized set of text prompts aloud, recording about 15 minutes of audio.

- Personal Voice uses on-device machine learning to keep users' information private and secure, and it integrates seamlessly with Live Speech so that users can talk with loved ones in their own Personal Voice.

Point and Speak

- The Point and Speak feature in Magnifier makes it easier for users who are blind or have low vision to interact with physical objects that carry several text labels.

- For example, when a user operates a household appliance such as a microwave oven, Point and Speak combines input from the camera, the LiDAR Scanner, and on-device machine learning to read aloud the text on each button as the user moves their finger across the keypad.

- Point and Speak is built into the Magnifier app on iPhone and iPad models equipped with a LiDAR Scanner.

- Point and Speak can also be used alongside other Magnifier features that help users navigate their physical environment, such as People Detection, Door Detection, and Image Descriptions.