Anthropic CEO Dario Amodei said that the R1 model from Chinese rival DeepSeek raises serious concerns about biological weapons safety, describing its performance as the “worst” of the models tested. Amodei’s concerns appear to go well beyond the commonly cited worries about DeepSeek sending user data to China.

Biological weapon danger

Amodei stated that DeepSeek produced rare information about biological weapons in a safety test run by Anthropic: “The worst of the models we have tested so far. There were absolutely no obstacles against producing this information.” Amodei said Anthropic regularly tests various artificial intelligence models to assess national security risks. These tests check whether models produce information about biological weapons that cannot easily be found on Google or in textbooks.

Amodei said he did not think DeepSeek’s models were “fully dangerous” today in providing rare and dangerous information, but that they could be in the near future. Although he praised DeepSeek’s team as “talented engineers”, he advised the company to “take artificial intelligence safety seriously”.

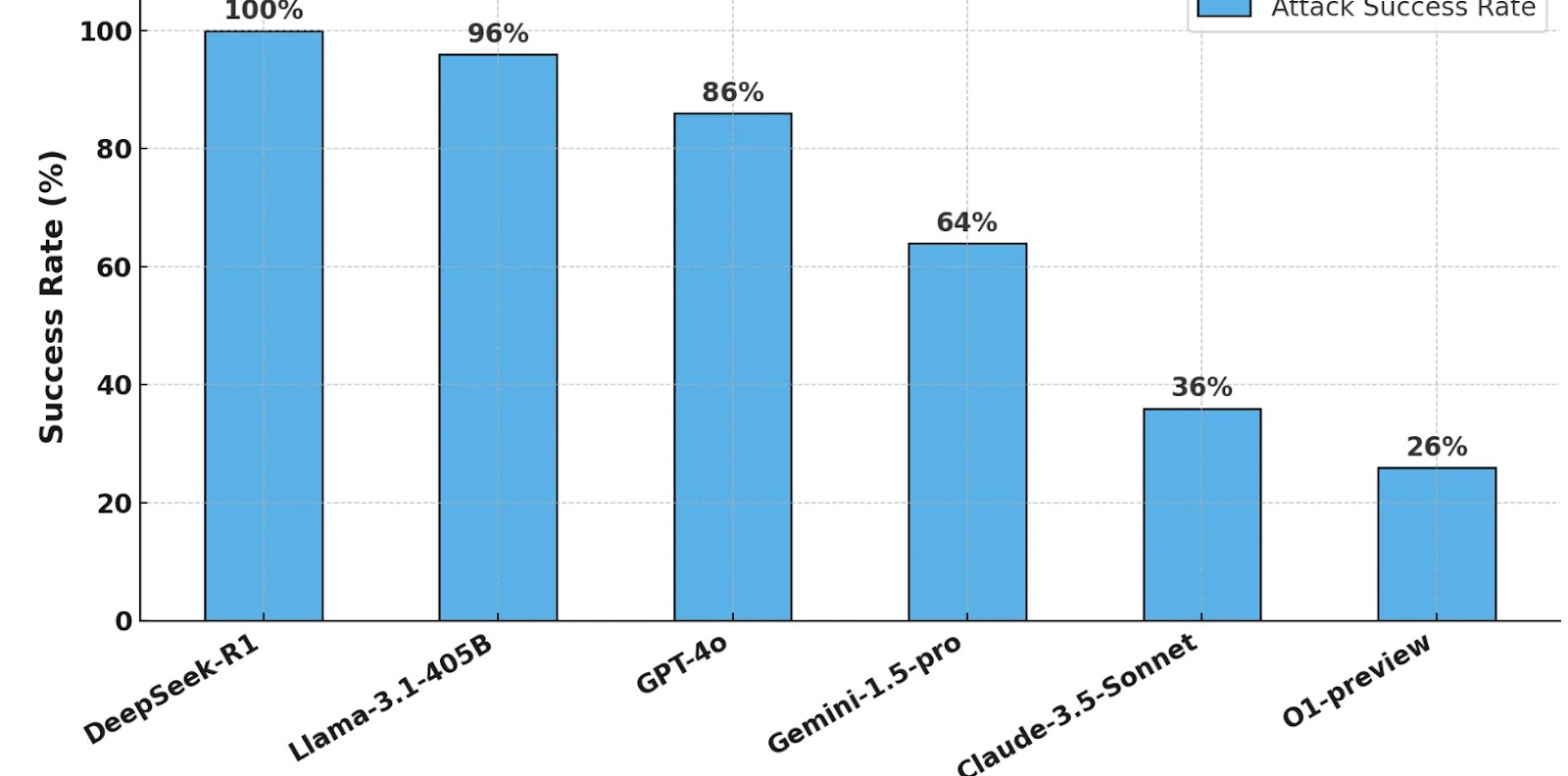

The rise of DeepSeek has raised other security concerns as well. Cisco security researchers reported last week that DeepSeek R1 failed to block any harmful prompts in safety tests, resulting in a 100 percent jailbreak success rate. Although Cisco made no statement about biological weapons, its tests showed DeepSeek producing harmful information about cybercrime and other illegal activities. However, it should be noted that Meta’s Llama-3.1-405B and OpenAI’s GPT-4 also had high failure rates of 96% and 86%, respectively.
