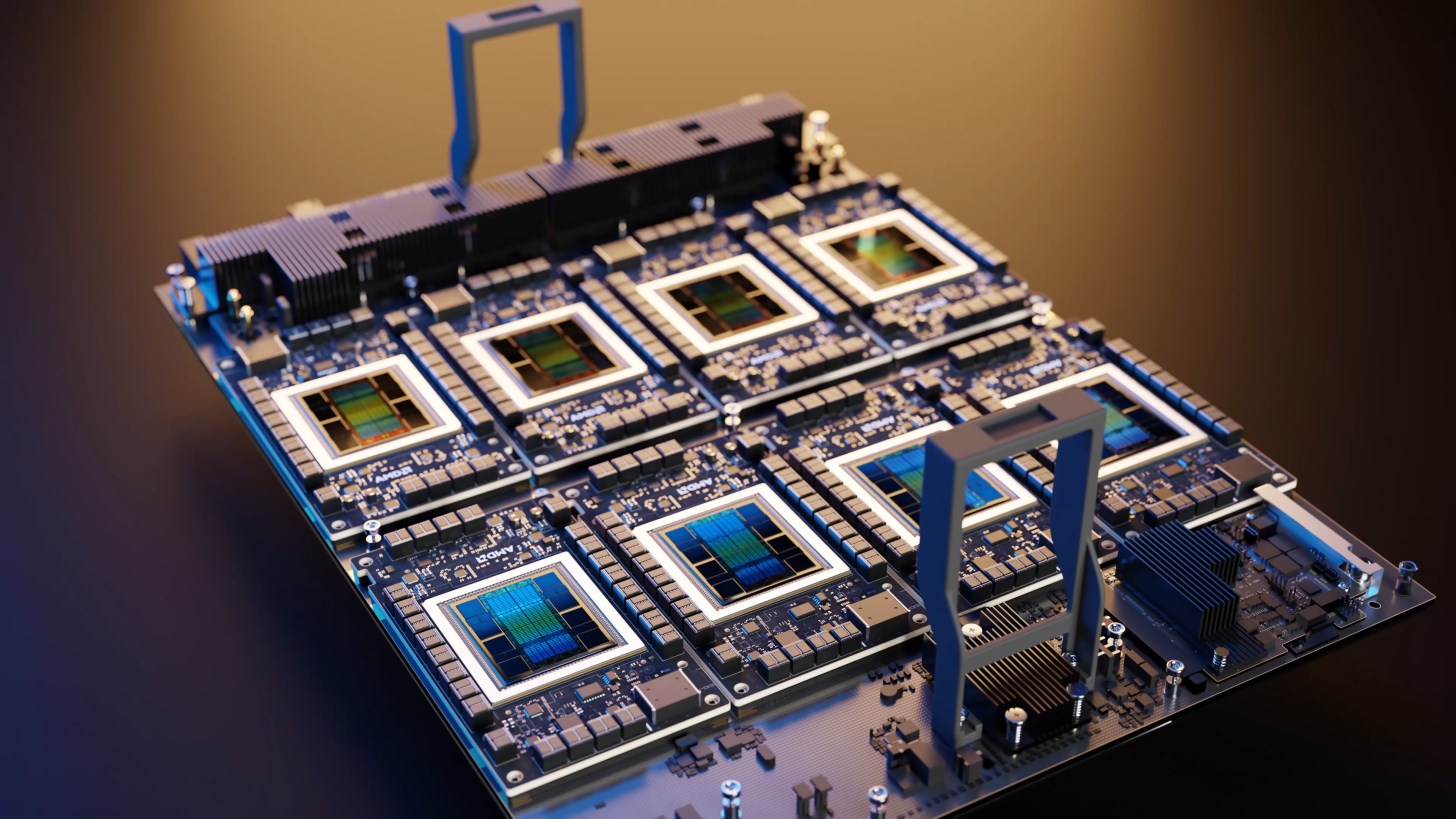

AMD will present its new generation of Instinct accelerators, codenamed MI300, in full detail at its AI-focused event on December 6. This family of GPU and CPU accelerators will be the company’s flagship product in the AI segment, currently AMD’s top strategic priority, and is designed to meet the industry’s critical demand for AI compute. The series is chiplet-based and uses TSMC’s advanced packaging technologies. The Instinct MI300X and MI300A are among the most anticipated accelerators in the AI segment.

AMD Instinct MI300X could break Nvidia’s AI edge

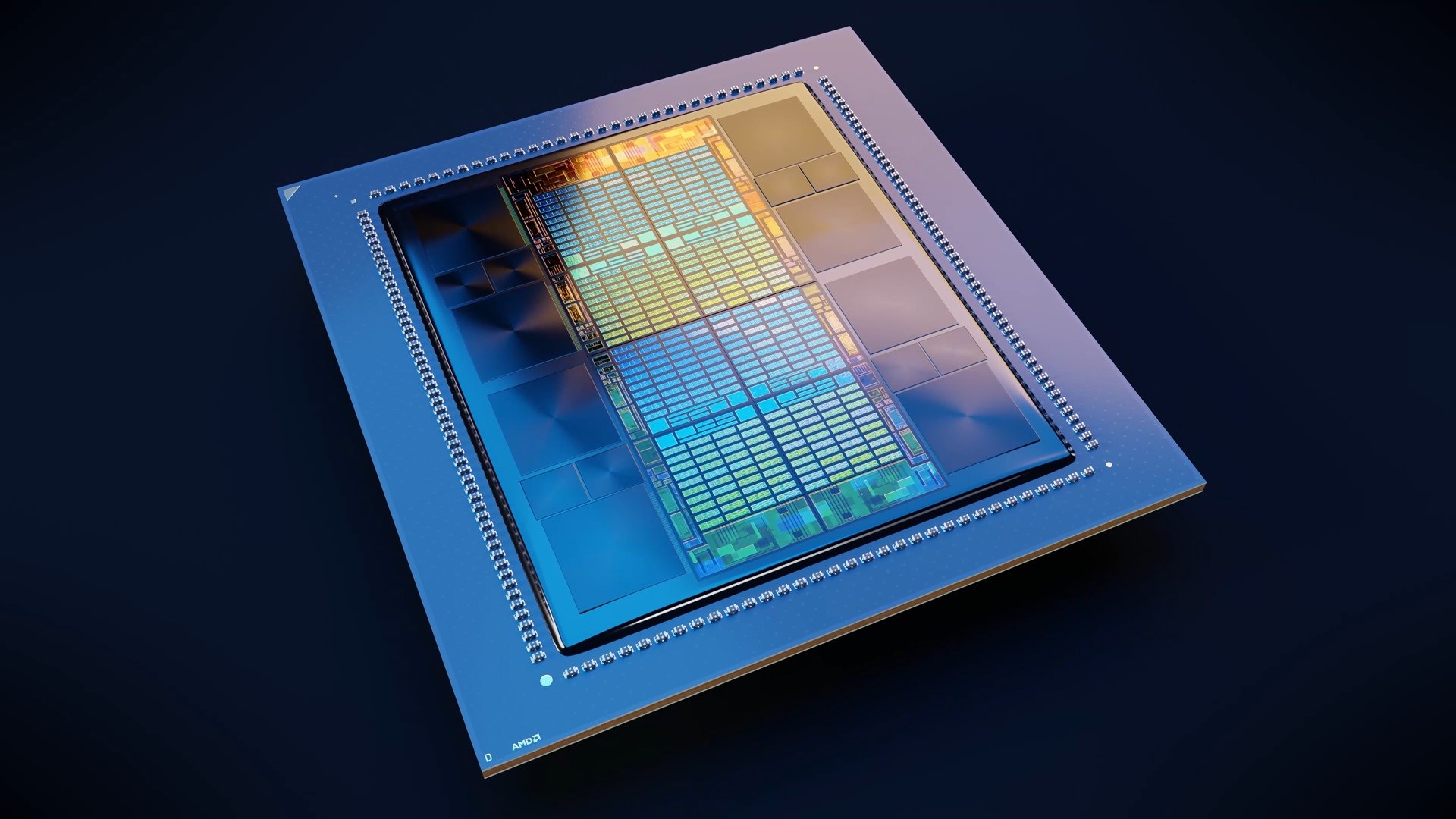

The AMD Instinct MI300X is sure to be the most talked-about solution, as it clearly targets Nvidia’s Hopper and Intel’s Gaudi accelerators in the AI segment. Built on the CDNA 3 architecture, the chip combines 5nm and 6nm IPs and packs a total of 153 billion transistors.

Instinct MI300X will host HBM3 memory on top of the Infinity Fabric solution. Based on the CDNA 3 GPU architecture, each GCD will have 40 compute units, equal to 2,560 cores. With eight compute dies (GCDs) in total, that adds up to 320 compute units, or 20,480 cores.
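The core count follows from simple multiplication. As a quick sanity check, the sketch below recomputes it from the quoted figures. The value of 64 cores per compute unit is not stated in the article; it is derived here from 2,560 cores across 40 compute units, and the variable names are purely illustrative.

```python
# Figures quoted in the article (pre-launch numbers, not confirmed specs).
COMPUTE_UNITS_PER_GCD = 40
CORES_PER_GCD = 2560
GCD_COUNT = 8

# Assumption derived from the two figures above: cores per compute unit.
cores_per_cu = CORES_PER_GCD // COMPUTE_UNITS_PER_GCD

# Scale the per-die figures up to the full package.
total_compute_units = COMPUTE_UNITS_PER_GCD * GCD_COUNT
total_cores = total_compute_units * cores_per_cu

print(f"{cores_per_cu} cores per compute unit")
print(f"{total_compute_units} compute units, {total_cores:,} cores in total")
```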

Instinct MI300X will also differ significantly from its predecessor on the memory side. While the MI250X has 128 GB of memory, the MI300X raises capacity by 50 percent to a total of 192 GB. To reach this capacity, AMD equips the MI300X with eight HBM3 stacks of 24 GB each. The memory will give the MI300X up to 5.2 TB/s of bandwidth, alongside 896 GB/s of Infinity Fabric bandwidth.

Nvidia’s upcoming H200 AI accelerator will offer 141 GB of memory, while Intel’s Gaudi 3 will offer 144 GB. Large memory pools are very important for large language models (LLMs), which are mostly memory bound. For comparison:

- Instinct MI300X – 192 GB HBM3

- Gaudi 3 – 144 GB HBM3

- H200 – 141 GB HBM3e

- MI300A – 128 GB HBM3

- MI250X – 128 GB HBM2e

- H100 – 96 GB HBM3

- Gaudi 2 – 96 GB HBM2e
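To put the list in perspective, the following sketch computes how much more memory the MI300X offers than each of the other accelerators. It uses only the capacities quoted in this article, which are pre-launch figures; the dictionary and the `advantage_percent` helper are illustrative names, not part of any vendor tool.

```python
# Capacities in GB as listed in the article (pre-launch figures).
capacities_gb = {
    "Instinct MI300X": 192,
    "Gaudi 3": 144,
    "H200": 141,
    "MI300A": 128,
    "MI250X": 128,
    "H100": 96,
    "Gaudi 2": 96,
}

# MI300X capacity as quoted: 8 HBM3 stacks of 24 GB each.
HBM3_STACKS = 8
GB_PER_STACK = 24
mi300x_gb = HBM3_STACKS * GB_PER_STACK


def advantage_percent(name):
    """Percentage by which the MI300X capacity exceeds the named accelerator's."""
    return (mi300x_gb / capacities_gb[name] - 1) * 100


for name, gb in capacities_gb.items():
    if name != "Instinct MI300X":
        print(f"MI300X vs {name} ({gb} GB): +{advantage_percent(name):.0f}%")
```

On these numbers, the MI300X holds roughly a third more memory than the H200 and Gaudi 3, and twice as much as the H100 and Gaudi 2 entries.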

AMD seems to hold up reasonably well on power consumption too. The AMD Instinct MI300X will draw 750W, a 50% increase over the 500W of the Instinct MI250X and 50W more than Nvidia’s new H200 GPU.
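The power figures can be checked the same way. The sketch below is a rough calculation using only the numbers quoted in this article. The H200 wattage is not stated directly; it is inferred from the 50W difference and should be treated as an assumption.

```python
# Power and memory figures quoted in the article (pre-launch numbers).
MI300X_WATTS = 750
MI250X_WATTS = 500
EXTRA_OVER_H200_WATTS = 50
MI300X_GB = 192
MI250X_GB = 128

# Generational increase in power draw, in percent.
increase_percent = (MI300X_WATTS - MI250X_WATTS) / MI250X_WATTS * 100

# Inferred, not quoted: H200 power implied by the 50W difference.
implied_h200_watts = MI300X_WATTS - EXTRA_OVER_H200_WATTS

# Memory capacity per watt for both generations.
mi300x_gb_per_watt = MI300X_GB / MI300X_WATTS
mi250x_gb_per_watt = MI250X_GB / MI250X_WATTS

print(f"Power increase over MI250X: {increase_percent:.0f}%")
print(f"Implied H200 power: {implied_h200_watts}W")
print(f"GB per watt: MI300X {mi300x_gb_per_watt:.3f}, MI250X {mi250x_gb_per_watt:.3f}")
```

Because memory capacity and power draw both grow by 50%, memory capacity per watt stays flat between the two generations on these figures.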

Exascale APUs coming with AMD Instinct MI300A

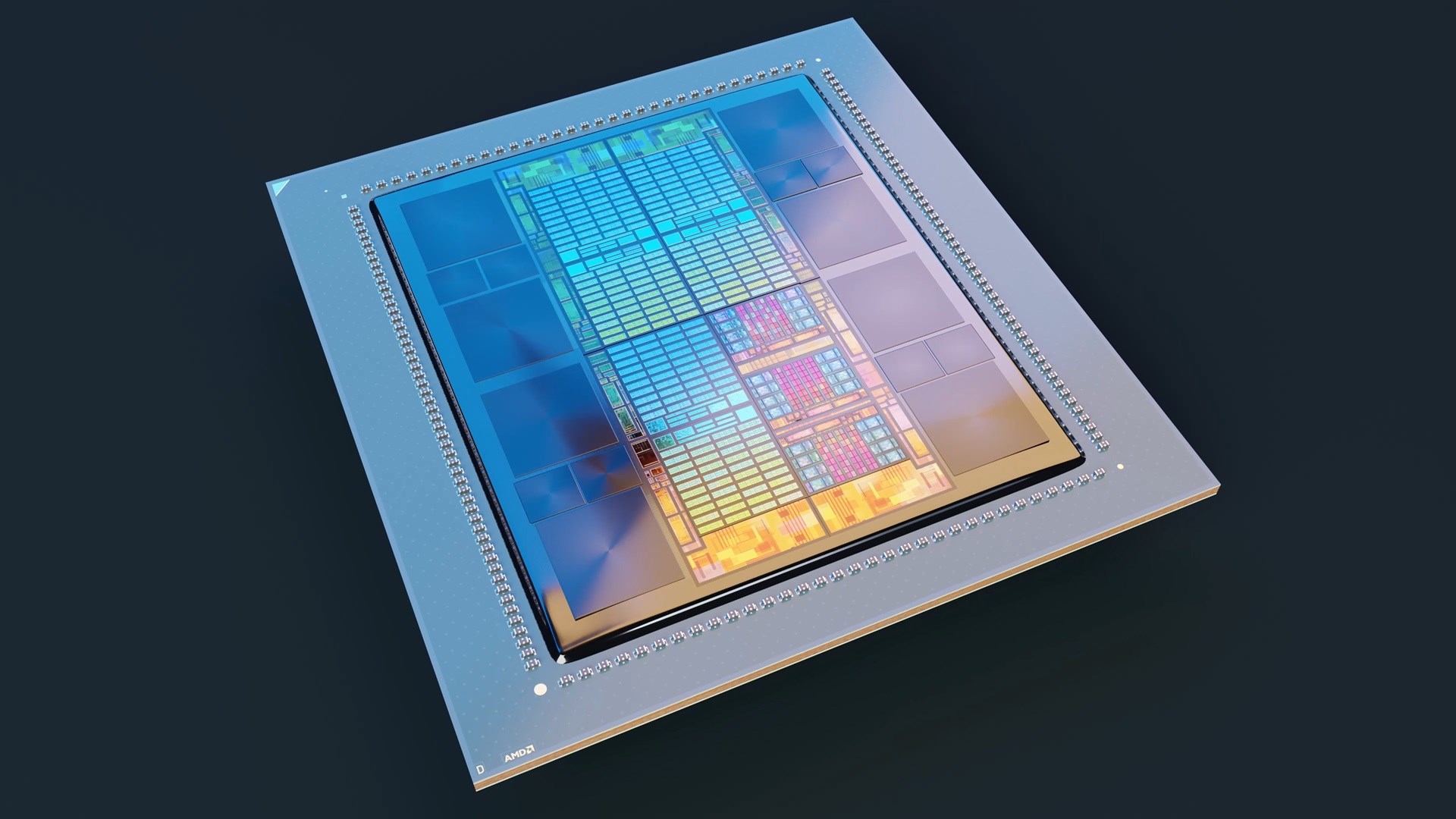

AMD has been working on APU solutions for a long time. For years it has been expected to introduce an exascale-class APU, aimed at the enterprise market rather than consumers. It looks like the wait will end with the Instinct MI300A. The MI300A is very similar to the MI300X, except that it uses TCO-optimized memory capacities and adds Zen 4 cores.

Two of the GCDs found in its sibling have been removed and replaced with three Zen 4 CCDs, each with its own cache and core IP. There are 8 cores and 16 threads per CCD, for a total of 24 cores and 48 threads on the active die. There is also 24 MB of L2 cache (1 MB per core) and a separate L3 cache pool (32 MB per CCD). Other details are quite similar.
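The CPU totals for the MI300A can be tallied from the per-CCD figures. The sketch below is a minimal check using the numbers quoted above. The 96 MB total for the separate cache pool is not stated in the article and is derived here by multiplication.

```python
# Per-CCD figures quoted in the article (pre-launch numbers).
CCD_COUNT = 3
CORES_PER_CCD = 8
THREADS_PER_CCD = 16
L2_MB_PER_CORE = 1
SEPARATE_CACHE_MB_PER_CCD = 32

total_cores = CCD_COUNT * CORES_PER_CCD
total_threads = CCD_COUNT * THREADS_PER_CCD
total_l2_mb = total_cores * L2_MB_PER_CORE

# Derived, not quoted: combined size of the per-CCD cache pools.
total_separate_cache_mb = CCD_COUNT * SEPARATE_CACHE_MB_PER_CCD

print(f"{total_cores} cores / {total_threads} threads")
print(f"{total_l2_mb} MB L2, {total_separate_cache_mb} MB in per-CCD cache pools")
```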

AMD Instinct MI300 accelerators in brief

- The first integrated CPU+GPU package

- Targets the exascale supercomputer market

- AMD MI300A (Integrated CPU + GPU)

- AMD MI300X (GPU Only)

- 153 billion transistors

- Up to 24 Zen 4 cores

- CDNA 3 GPU architecture

- HBM3 Memory up to 192 GB

- 5nm+6nm process

Instinct MI300 accelerators will be a big step for AMD, but competitors are not sitting idle. Nvidia moved to HBM3e memory with the H200 but otherwise added little on top of the existing H100. However, Nvidia is preparing Blackwell GPUs for 2024, and that family is expected to bring major upgrades. AMD will announce the Instinct MI300 family in full detail on December 6.